Data-driven decision-making is central to modern digital marketing, and A/B testing is one of its most practical tools for optimizing campaigns and improving return on investment (ROI). This guide explains how A/B testing works in digital marketing and how to use it to increase website conversions, improve email marketing performance, and refine your overall marketing strategy. It moves from the fundamental principles of A/B testing to more advanced techniques, so you have what you need to put them into practice.

Whether you are a seasoned marketer or just starting out, understanding A/B testing is essential for getting good results in a competitive digital market. This guide covers formulating testable hypotheses, identifying key performance indicators (KPIs), designing effective experiments, analyzing results, and implementing winning variations. Mastering these steps helps you get more out of your digital marketing efforts and make measurable improvements to your campaigns. The approach is practical and actionable, aimed at helping you make informed decisions and drive meaningful growth.

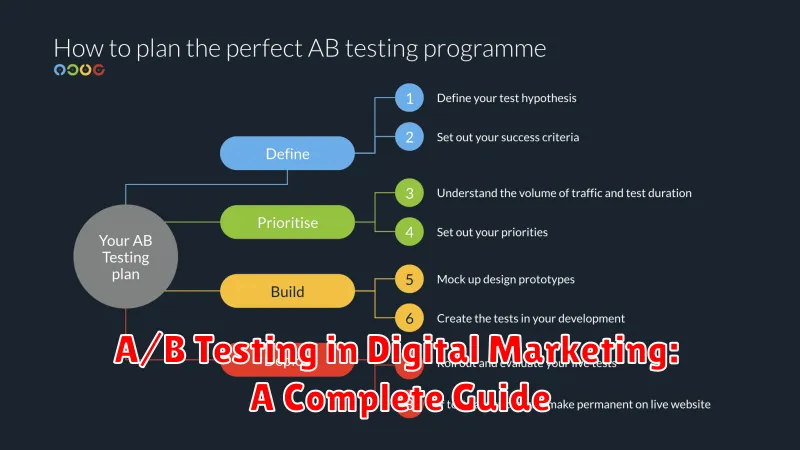

Understanding A/B Testing

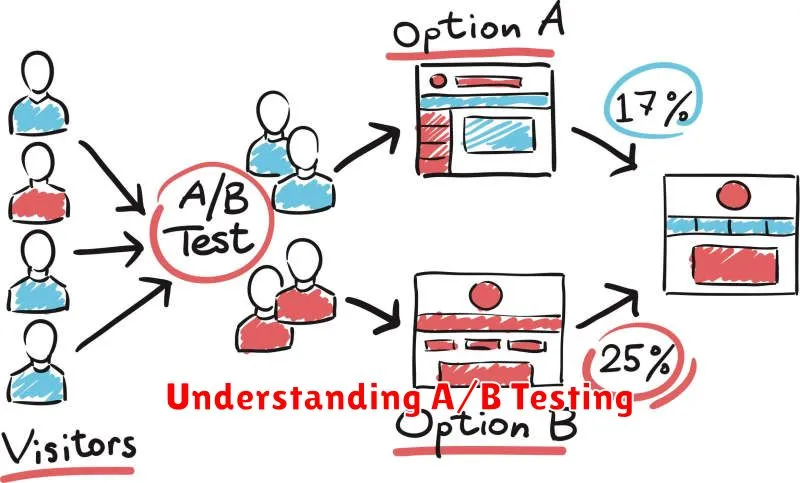

A/B testing, also known as split testing, is a fundamental method in digital marketing used to compare two versions of a webpage, email, or other marketing asset to determine which performs better.

The process involves creating two variations (A and B) of a single element, such as a headline, call-to-action button, or image. A randomly selected portion of your audience is then shown version A, while the remaining audience sees version B. Key performance indicators (KPIs) are tracked for each version.
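One common way to implement the random split is to hash a stable user identifier instead of flipping a coin on every visit, so a returning visitor keeps seeing the same version. The Python sketch below assumes each visitor has a persistent ID (for example, from a first-party cookie) and a 50/50 split; the function name `assign_variant` and the experiment name are illustrative, not part of any particular testing tool.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split_a: float = 0.5) -> str:
    """Return "A" or "B" for this user, giving the same answer on every call.

    Including the experiment name in the hash lets different experiments
    split the audience independently of one another.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 32 bits of the hash onto a number in [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "A" if bucket < split_a else "B"


# Illustrative check: roughly half of 10,000 hypothetical users land in each group.
groups = [assign_variant(f"user-{i}", "cta-color-test") for i in range(10_000)]
print("A:", groups.count("A"), "B:", groups.count("B"))
```

Because the assignment depends only on the user ID and experiment name, no lookup table is needed to remember who saw which version.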

By analyzing the resulting data, marketers can identify which variation leads to higher conversion rates, improved click-through rates, or other desired outcomes. This data-driven approach allows for informed decisions about website optimization and marketing campaign effectiveness.

The goal of A/B testing is to optimize content and improve the user experience, ultimately leading to increased conversions and better business results. It is an iterative process, with ongoing testing and refinement contributing to continuous improvement.

Choosing the Right Variable to Test

Selecting the right variable to change is one of the most important decisions in an A/B test. A well-chosen variable has a direct effect on your key metrics, so the result tells you something useful. Focus on the elements with the highest potential for improvement to get the most return from your testing effort. Don’t try to test everything at once; change one variable per test so the results are clear and actionable.

Consider testing high-impact elements such as calls to action, headlines, images, and form fields. Small changes in these areas can lead to meaningful improvements in conversion rates. For example, testing a different color or wording for your call-to-action button could noticeably change its click-through rate.

Prioritize variables based on your specific goals and data analysis. Analyze user behavior using website analytics to identify areas of friction or drop-off. For instance, if users are abandoning their carts frequently, testing different checkout processes might be a worthwhile endeavor.
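Finding the step with the largest drop-off is simple arithmetic once you know how many users reach each step of a funnel. This Python sketch uses made-up step names and counts purely for illustration; in practice the counts would come from your analytics tool’s funnel report, and the function name `step_drop_off` is hypothetical.

```python
def step_drop_off(funnel):
    """Return (transition, share of users lost) for each consecutive pair of steps.

    `funnel` is a list of (step_name, users_reaching_step) tuples, in order.
    """
    transitions = []
    for (prev_name, prev_users), (name, users) in zip(funnel, funnel[1:]):
        lost = 1 - users / prev_users if prev_users else 0.0
        transitions.append((f"{prev_name} -> {name}", lost))
    return transitions


# Hypothetical counts, for illustration only.
funnel = [
    ("Product page", 10_000),
    ("Cart", 4_000),
    ("Checkout", 2_400),
    ("Purchase", 600),
]

# List transitions from the worst drop-off to the smallest.
for transition, lost in sorted(step_drop_off(funnel), key=lambda t: t[1], reverse=True):
    print(f"{transition}: {lost:.0%} drop-off")
```

With these illustrative numbers the biggest loss is between checkout and purchase, which would make the checkout process a natural first candidate for testing.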

Creating Control vs Variation

Every A/B test needs a clearly defined control and variation. The control is the original version of your webpage, email, or advertisement: the baseline against which you’ll measure performance. The variation is the modified version in which you’ve implemented a specific change.

Limit this change to a single element so you can attribute any difference in results to it. For instance, if you’re testing a call-to-action button, change only one of its attributes (its color, text, or placement) in the variation, and keep every other aspect identical to the control.
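A simple safeguard is to describe the control and the variation as data and check that they differ in exactly one element before launching. In this Python sketch the element names and values (`headline`, `cta_color`, and so on) and the helper functions are hypothetical, chosen only to illustrate the check.

```python
# Hypothetical element names and values, for illustration only.
CONTROL = {
    "headline": "Start your free trial",
    "cta_text": "Sign up",
    "cta_color": "#1a73e8",
    "hero_image": "team.jpg",
}

# The variation copies the control and overrides exactly one element.
VARIATION = {**CONTROL, "cta_color": "#e8711a"}


def changed_elements(control: dict, variation: dict) -> set:
    """Names of elements whose values differ between the two versions."""
    keys = control.keys() | variation.keys()
    return {k for k in keys if control.get(k) != variation.get(k)}


def check_single_change(control: dict, variation: dict) -> str:
    """Return the one changed element, or raise if zero or several changed."""
    diff = changed_elements(control, variation)
    if len(diff) != 1:
        raise ValueError(f"expected exactly one changed element, found {sorted(diff)}")
    return diff.pop()


print(check_single_change(CONTROL, VARIATION))  # cta_color
```

Building the variation as "control plus one override" makes it hard to introduce a second, accidental change.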

Careful planning and execution when creating your control and variation ensure that your A/B test results are valid and reliable. This lets you confidently identify the impact of specific changes and make informed decisions to optimize your marketing campaigns.

Analyzing Test Results

After your A/B test has run its course, the next crucial step is analyzing the results. This involves determining whether the observed differences between your variations are statistically significant and not due to random chance.

Start by examining the key performance indicators (KPIs) you defined earlier, such as conversion rate, click-through rate, bounce rate, or average order value. Compare each variation’s performance against the control and note the size and direction of any differences. Whether a difference is large enough to trust is a question for the significance test.
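As a first pass, compute each version’s conversion rate and the variation’s relative lift over the control. The Python sketch below uses hypothetical visitor and conversion counts; the class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    name: str
    visitors: int
    conversions: int

    @property
    def conversion_rate(self) -> float:
        # Share of visitors who converted; 0.0 if the variant had no traffic.
        return self.conversions / self.visitors if self.visitors else 0.0


def relative_lift(control: VariantResult, variant: VariantResult) -> float:
    """Relative change in conversion rate, e.g. 0.12 means 12% higher than control."""
    if control.conversion_rate == 0:
        raise ValueError("control conversion rate is zero; relative lift is undefined")
    return variant.conversion_rate / control.conversion_rate - 1


# Hypothetical results, for illustration only.
control = VariantResult("A (control)", visitors=10_000, conversions=500)
variation = VariantResult("B (variation)", visitors=10_000, conversions=560)

for v in (control, variation):
    print(f"{v.name}: {v.conversion_rate:.2%}")
print(f"Relative lift of B over A: {relative_lift(control, variation):+.1%}")
```

A lift on its own does not tell you whether the difference could have arisen by chance; that is what the significance test addresses.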

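Statistical significance helps you judge whether an observed difference is likely to reflect the change you made rather than random fluctuation. A common threshold is a p-value of 0.05 or less. The p-value is the probability of seeing a difference at least as large as the one you measured if the two versions actually performed the same. A p-value of 0.05 or less therefore means a difference this large would be unusual under pure chance; it does not mean there is a 95% probability that the variation is truly better.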
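For conversion-rate KPIs, one widely used way to get a p-value is the two-proportion z-test, which relies on a normal approximation and is reasonable when each version has many visitors. This Python sketch assumes that test and a two-sided hypothesis; testing platforms may use other methods (for example, Bayesian or sequential approaches), so their reported figures can differ. The function name and the counts in the usage lines are hypothetical.

```python
import math


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates.

    Uses the pooled two-proportion z-test (normal approximation).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Under the null hypothesis, both versions share a single conversion rate.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Everyone (or no one) converted in both groups: no evidence of a difference.
        return 1.0
    z = (p_b - p_a) / se
    # Probability that a standard normal variable lies at least |z| away from 0.
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical counts: 500/10,000 conversions for A, 560/10,000 for B.
p = two_proportion_p_value(500, 10_000, 560, 10_000)
print(f"p-value: {p:.3f}")
print("significant at 0.05" if p <= 0.05 else "not significant at 0.05")
```

With these made-up numbers (5.0% versus 5.6%), the p-value comes out at about 0.058, just above the 0.05 threshold, so the test would not yet declare a winner despite a 12% relative lift.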

Also look at the confidence interval for the difference between versions, which gives a range of plausible values for the true difference in performance. A narrower interval means a more precise estimate; an interval that includes zero means the data are still consistent with no real difference.
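A confidence interval for the difference in conversion rates can be computed with the same kind of normal approximation. The Python sketch below uses the simple Wald interval; that choice is an assumption made for readability, and the interval can be inaccurate for very small samples or for rates near 0% or 100%, where other interval methods are often preferred. The function name and counts are hypothetical.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Wald confidence interval for (conversion rate of B) - (conversion rate of A)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Unpooled standard error: each version keeps its own observed rate.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    # Critical value, about 1.96 for a 95% interval.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical counts: 500/10,000 conversions for A, 560/10,000 for B.
low, high = diff_confidence_interval(500, 10_000, 560, 10_000)
print(f"95% CI for B - A: {low:+.2%} to {high:+.2%}")
```

For these illustrative counts the interval runs from about -0.02 to +1.22 percentage points. Because it includes zero, the data are consistent with no real difference; with four times as many visitors at the same rates, the interval would be half as wide.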

Tools for A/B Testing

Selecting the right A/B testing tool is crucial for effective experimentation. Several platforms offer diverse features and capabilities to suit various needs and budgets. Here’s a brief overview of some popular options:

Popular A/B Testing Platforms

- Google Optimize: Formerly a free tool (with a paid Optimize 360 tier) integrated with Google Analytics. Google discontinued both on September 30, 2023, so it is no longer available for new experiments.

- Optimizely: A comprehensive platform with advanced targeting and personalization options, suitable for enterprise-level testing.

- VWO (Visual Website Optimizer): Provides a visual editor for easy experiment creation and offers a range of testing capabilities.

- AB Tasty: A user-friendly platform with features for client-side and server-side testing, along with AI-powered personalization.

When choosing a tool, consider factors like ease of use, integration with existing analytics platforms, advanced features (e.g., multivariate testing, personalization), and pricing.

Common Mistakes to Avoid

A/B testing, while powerful, can be easily misused. Avoiding these common pitfalls will significantly improve the reliability and effectiveness of your tests.

Insufficient Sample Size

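Testing with too few participants produces an underpowered test: genuine differences can go undetected, and apparent winners may be nothing more than noise. Estimate the required sample size before launching, based on your baseline conversion rate and the smallest improvement you want to be able to detect.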
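The required sample size depends on the baseline conversion rate, the smallest improvement you want to detect (often called the minimum detectable effect), the significance level, and the statistical power. The Python sketch below uses a common normal-approximation formula for comparing two proportions; the function name and example rates are hypothetical, and online calculators may use slightly different formulas and so give somewhat different numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variant for a two-sided test.

    Normal-approximation formula for comparing two proportions.
    """
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be strictly between 0 and 1")
    effect = expected_rate - baseline_rate
    if effect == 0:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Hypothetical goal: detect a lift from a 5% to a 6% conversion rate.
print(sample_size_per_variant(0.05, 0.06))
```

For a 5% baseline and a hoped-for 6%, at a 0.05 significance level and 80% power, this gives a little over 8,000 visitors per variant. Halving the detectable improvement nearly quadruples the required traffic, which is why small effects are expensive to test.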

Testing Too Many Elements Simultaneously

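Changing multiple elements at once makes it difficult to isolate the variable responsible for changes in performance. Test one element at a time, or use a multivariate test, which is designed to evaluate combinations of changes but needs substantially more traffic.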

Short Testing Duration

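Running tests for too short a period can skew results, because traffic and user behavior fluctuate by day of the week. Run each test for at least one full week, ideally two, and keep it running until it reaches the sample size you calculated in advance. Stopping as soon as the results first look significant inflates the chance of a false positive.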
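Once you know the sample size per variant, you can turn it into a planned duration based on your traffic, rounded up to whole weeks so every day of the week is covered equally. In this Python sketch, `daily_visitors` is assumed to be the number of visitors entering the experiment per day across all variants; the function name is hypothetical.

```python
import math


def planned_duration_days(sample_per_variant: int, num_variants: int,
                          daily_visitors: int, min_weeks: int = 1) -> int:
    """Days to run a test so it reaches its planned sample, in whole weeks."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    total_needed = sample_per_variant * num_variants
    days = math.ceil(total_needed / daily_visitors)
    # Round up to complete weeks so every weekday is represented equally.
    weeks = max(min_weeks, math.ceil(days / 7))
    return weeks * 7


# Hypothetical inputs: 8,155 visitors per variant, 2 variants, 1,000 visitors/day.
print(planned_duration_days(8_155, 2, 1_000))  # 21
```

Here the raw requirement is 17 days, which rounds up to a three-week test. Fixing the end date in advance also removes the temptation to stop early.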

Ignoring Statistical Significance

Don’t declare a winner based solely on observed differences. Ensure the results are statistically significant to avoid implementing changes based on random chance.